Tables gallery

The following sections highlight some of the ways you can use tables:

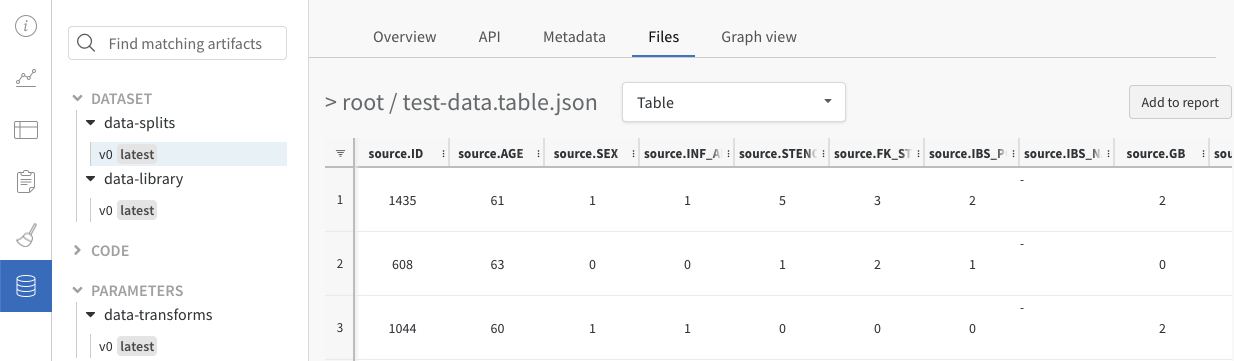

View your data

Log metrics and rich media during model training or evaluation, then visualize the results in a persistent database that syncs to the cloud or to your own hosting instance.

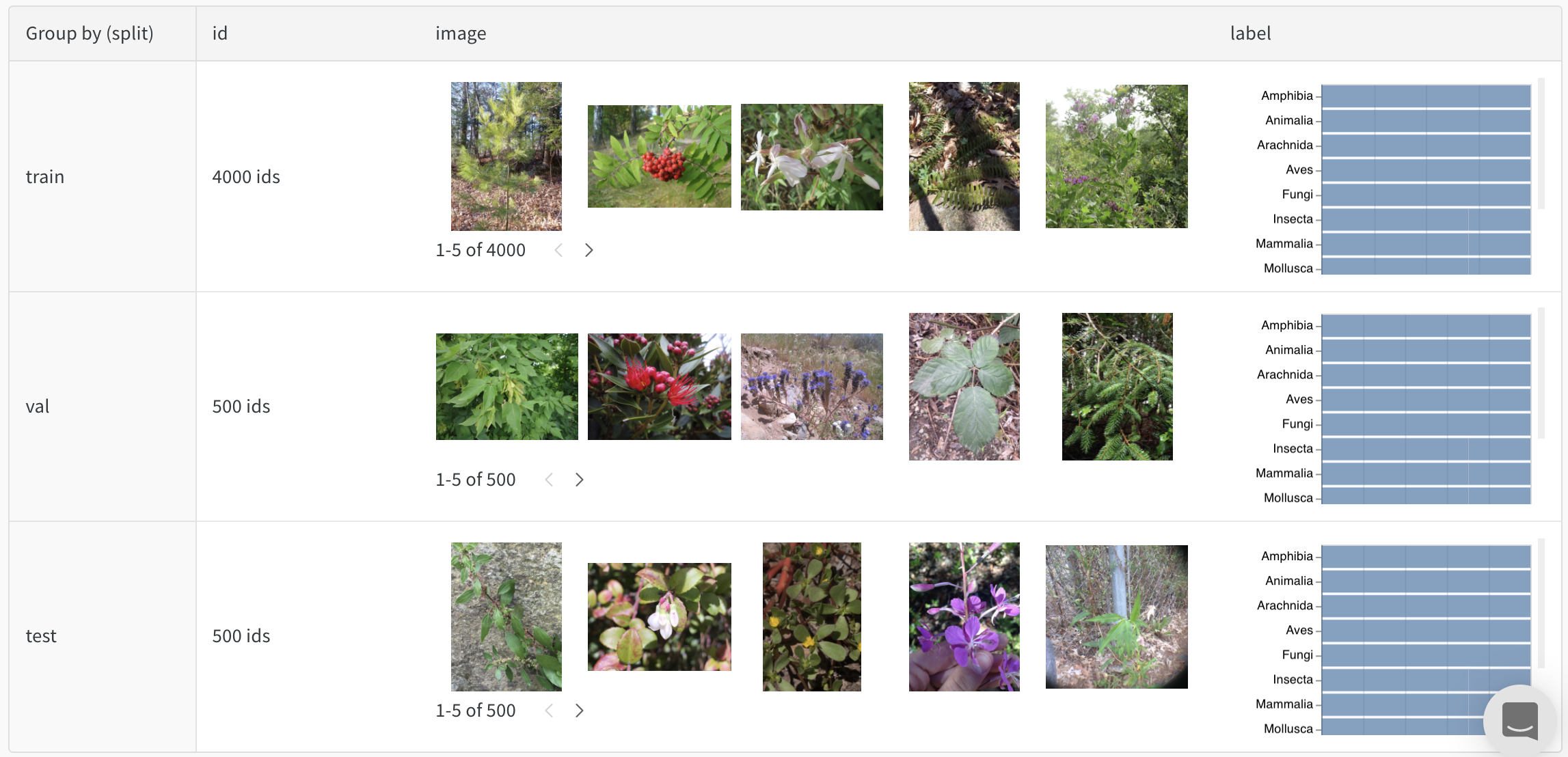

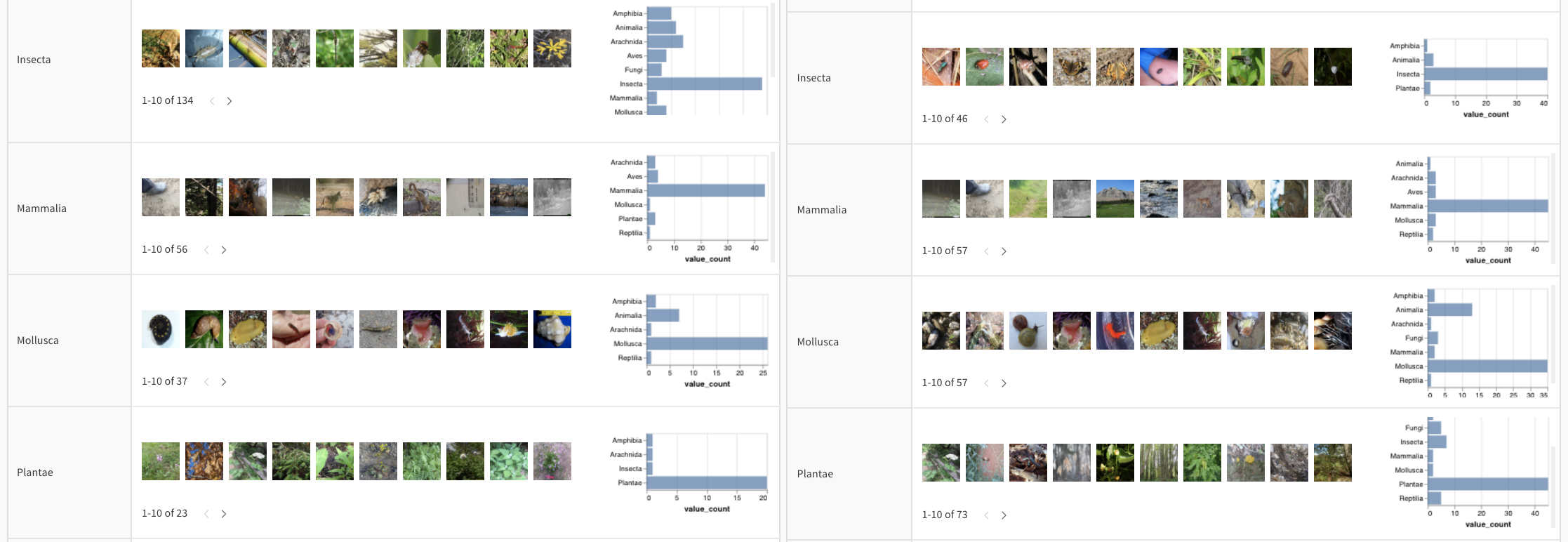

For example, check out this table that shows a balanced split of a photos dataset.
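As a rough illustration of the logging step described above, the sketch below builds a small table of dataset rows and logs it with `wandb.Table`. The project name `tables-gallery-demo`, the column names, and the helpers `build_split_rows` and `log_split_table` are hypothetical. The round-robin assignment is only a stand-in for however your dataset is actually partitioned.

```python
from collections import defaultdict
from itertools import cycle

# Hypothetical column layout; adjust to your own dataset.
SPLIT_COLUMNS = ["id", "label", "split"]


def build_split_rows(labels, splits=("train", "val", "test")):
    """Assign each example to a split round-robin within its class,
    so every class is spread evenly across the splits."""
    per_class = defaultdict(lambda: cycle(splits))
    return [[i, label, next(per_class[label])] for i, label in enumerate(labels)]


def log_split_table(rows, project="tables-gallery-demo"):
    """Log the rows as a W&B Table (assumes wandb is installed and configured)."""
    import wandb  # imported lazily so build_split_rows works without wandb

    run = wandb.init(project=project)
    table = wandb.Table(columns=SPLIT_COLUMNS, data=rows)
    run.log({"dataset_split": table})
    run.finish()
```

Calling `log_split_table(build_split_rows(labels))` would produce a table that you can group by `split` and `label` in the UI to check the balance. Rich media can be logged the same way by placing objects such as `wandb.Image` in a cell.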

Interactively explore your data

View, sort, filter, group, join, and query tables to understand your data and model performance, with no need to browse static files or rerun analysis scripts.

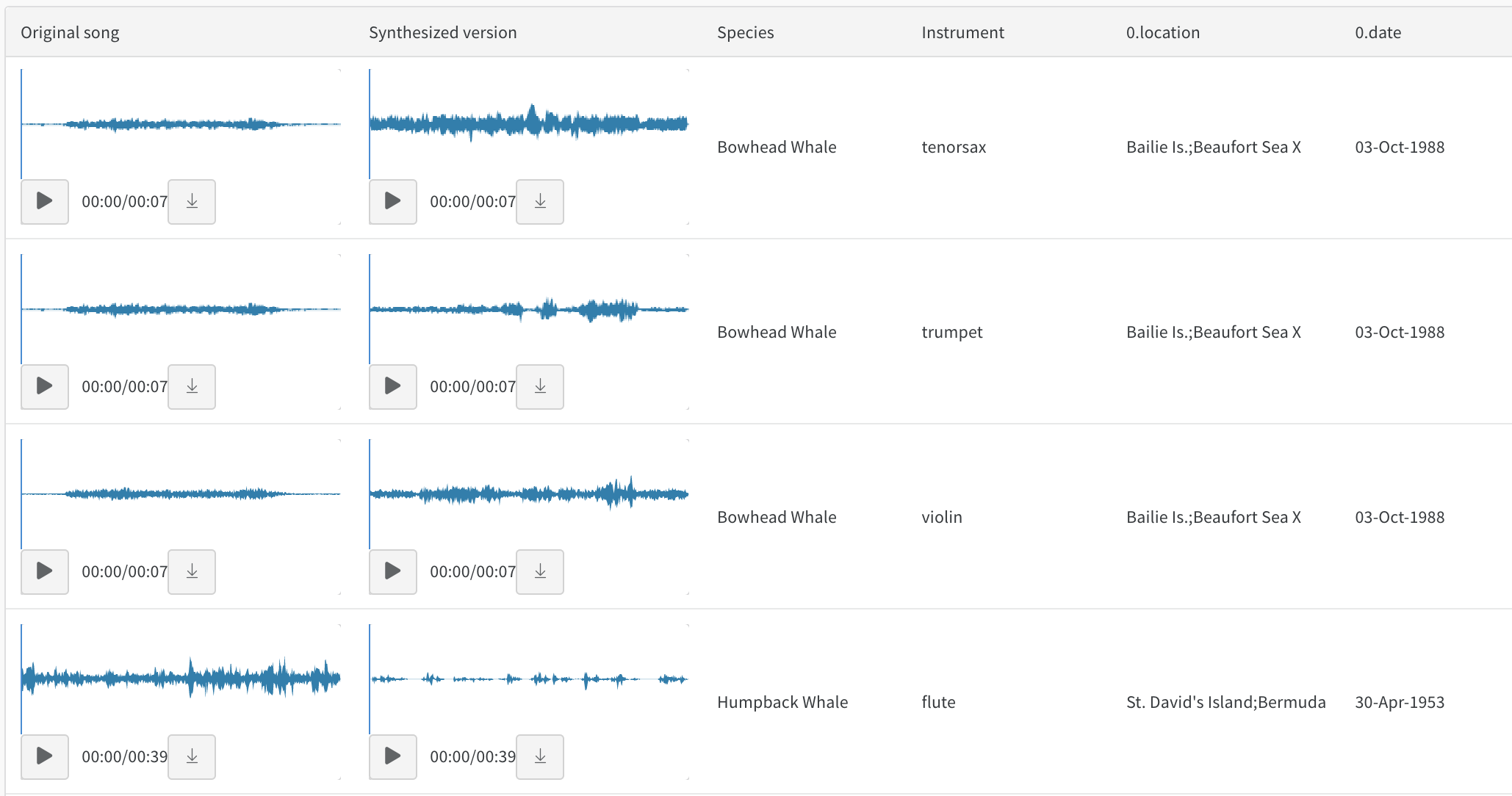

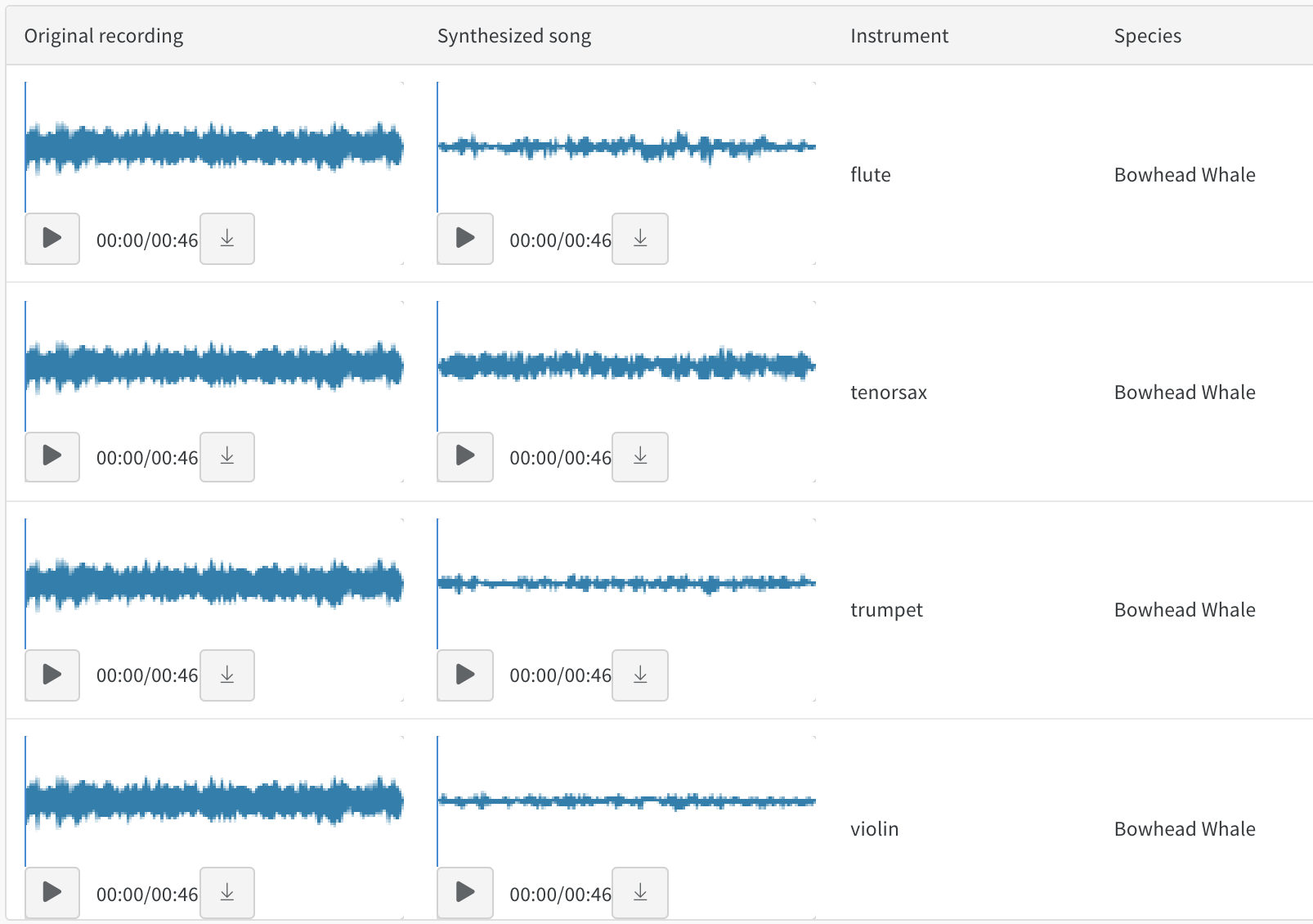

For example, see this report on style-transferred audio.

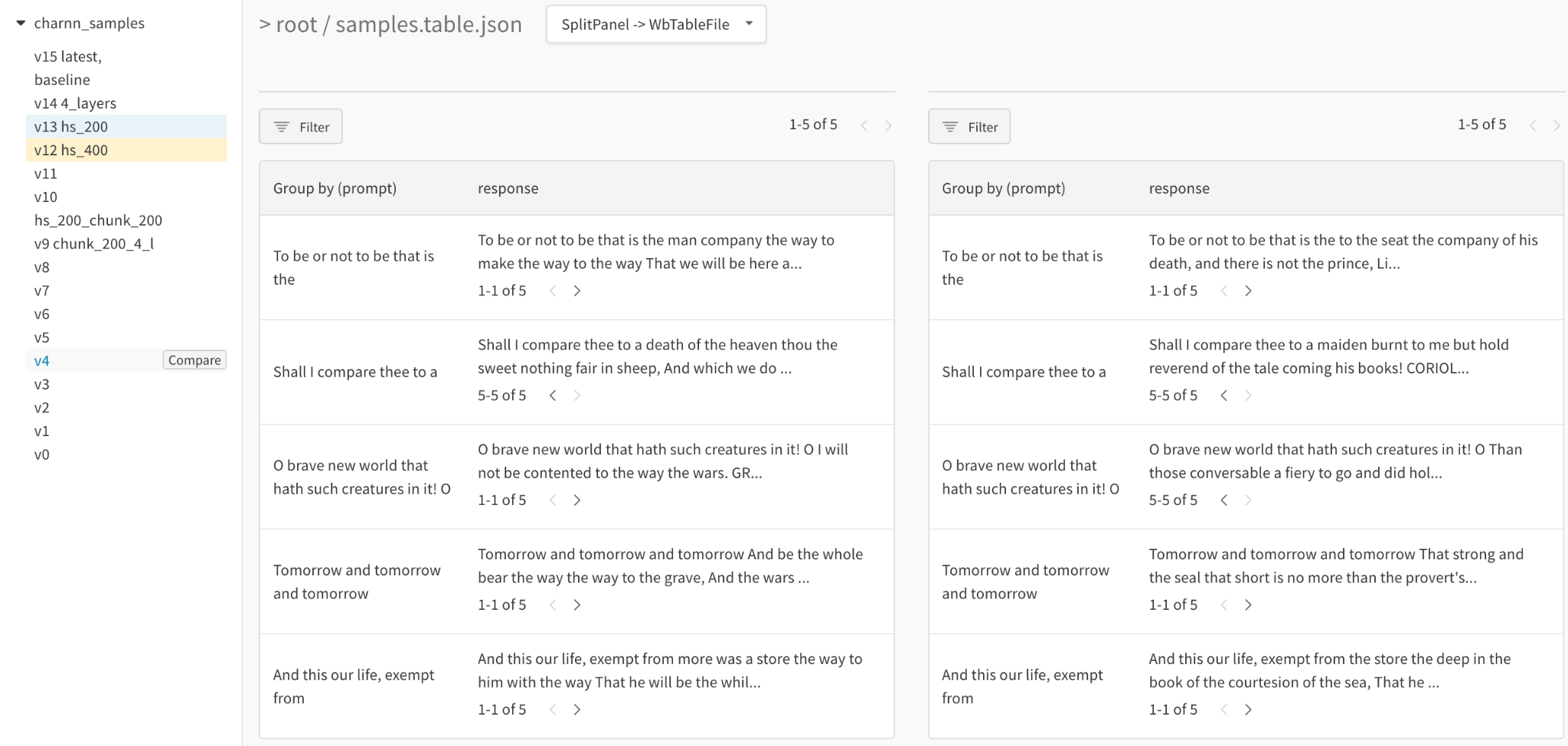

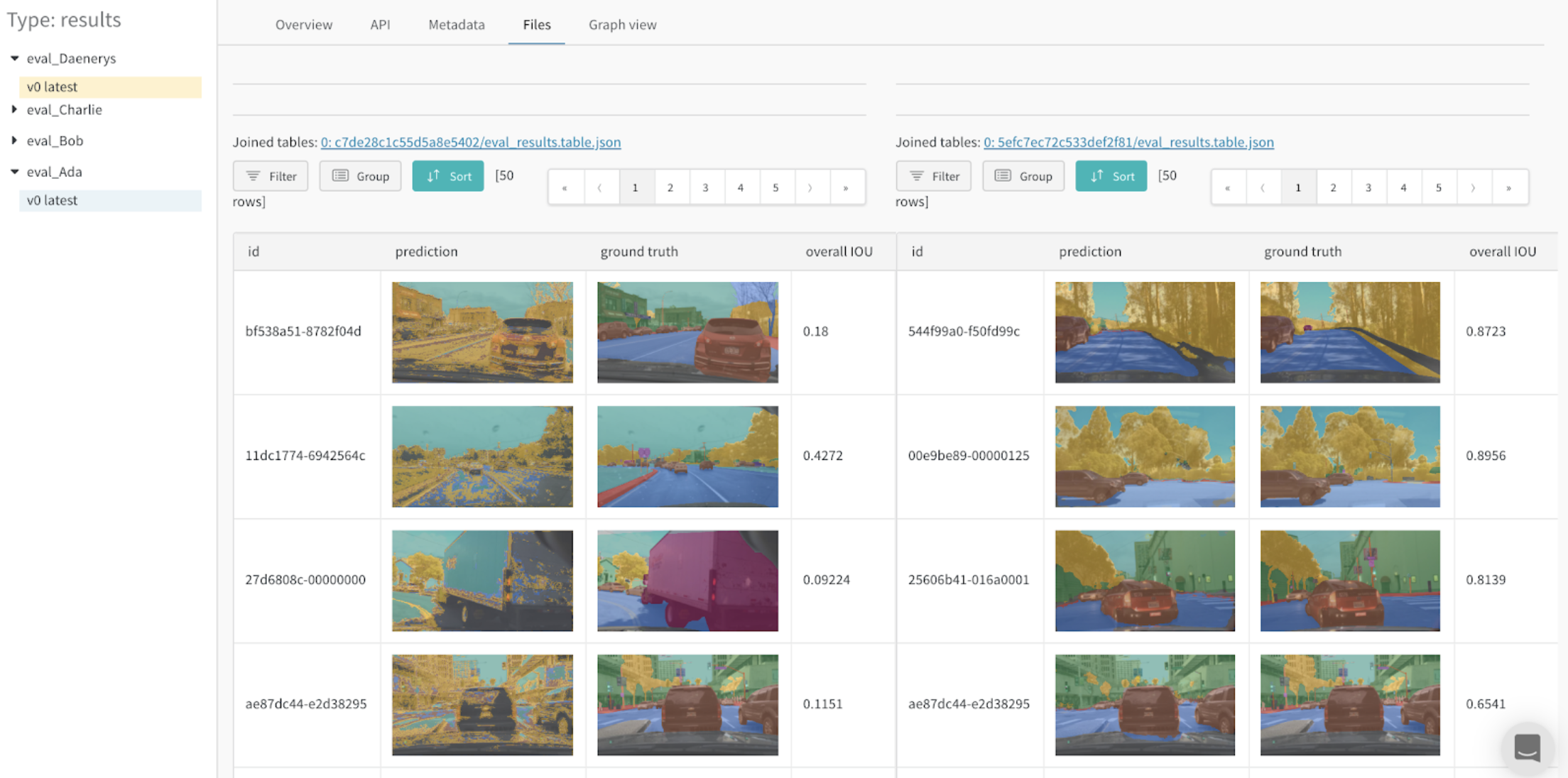

Compare model versions

Quickly compare results across different training epochs, datasets, hyperparameter choices, model architectures, and more.

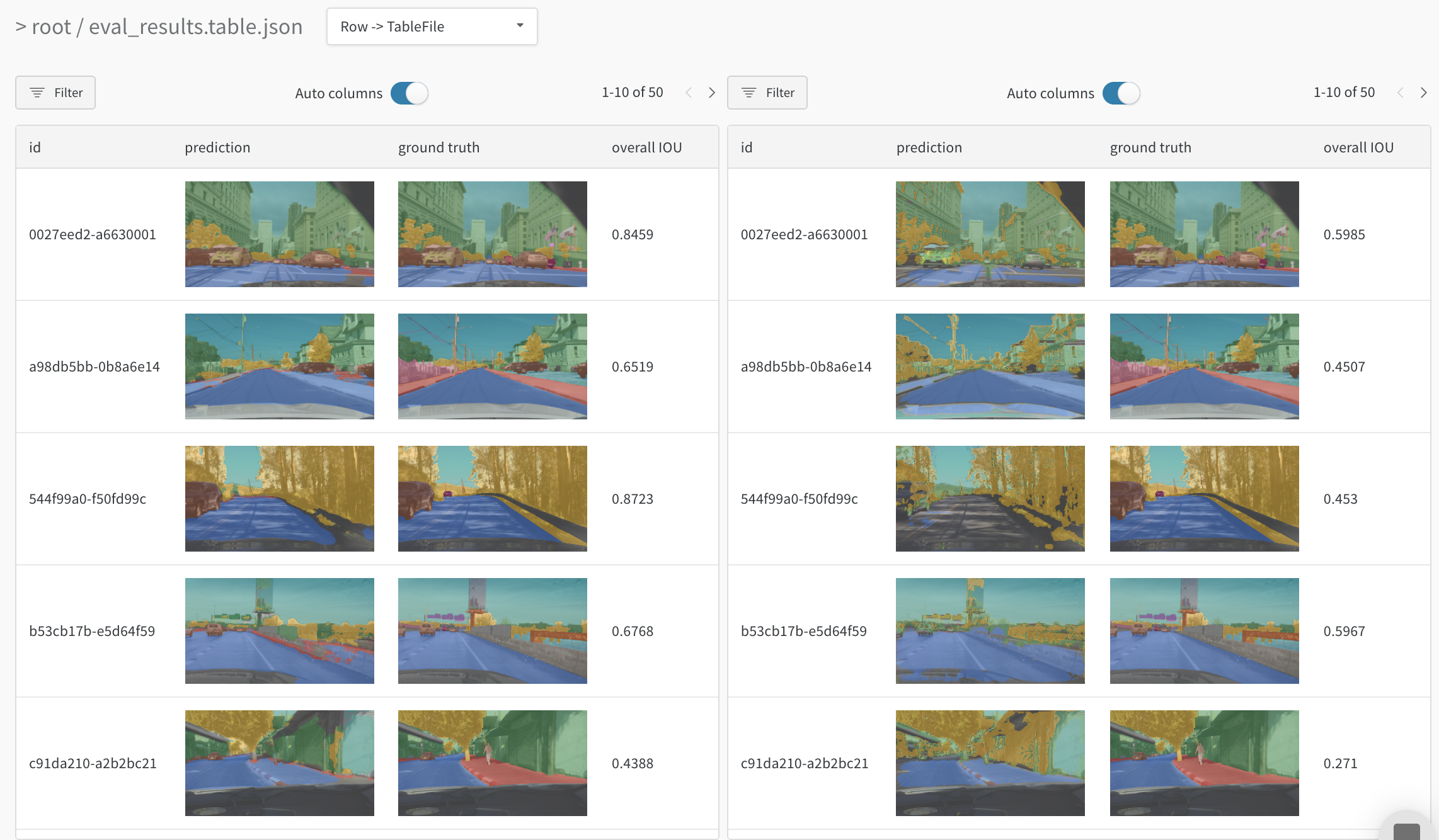

For example, see this table that compares two models on the same test images.

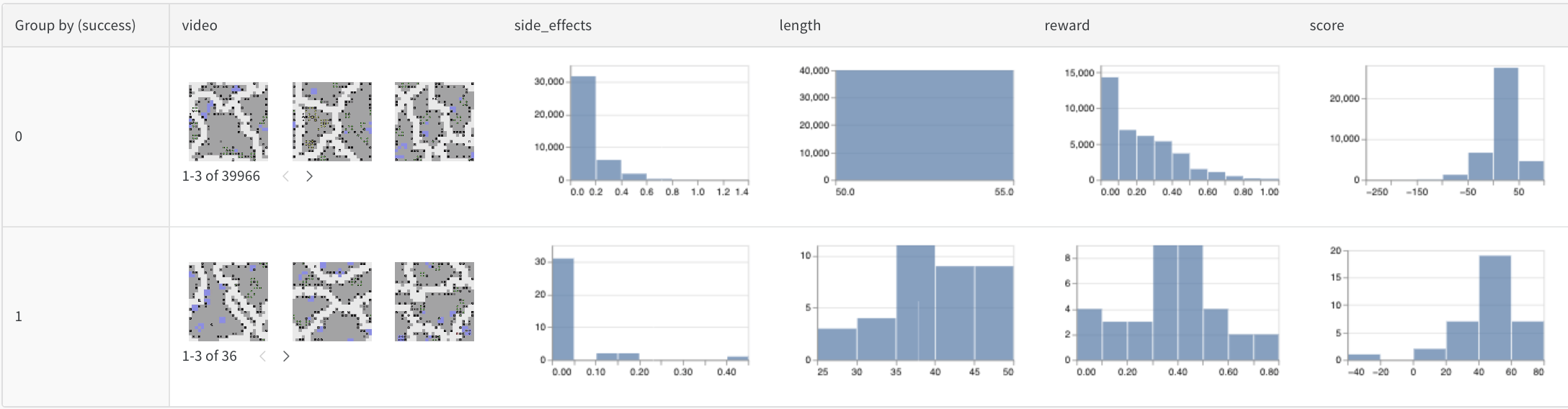
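One possible way to prepare such a comparison is to line up both models' predictions on the same example IDs before logging, as in the sketch below. The column names, the project name, and the helpers `build_comparison_rows` and `log_comparison_table` are hypothetical. Joining in Python is just one option, since tables can also be joined interactively in the UI.

```python
# Hypothetical column layout for a two-model comparison.
COMPARISON_COLUMNS = ["id", "truth", "model_a", "model_b", "agree"]


def build_comparison_rows(truth, preds_a, preds_b):
    """Join two models' predictions on example id.

    Each argument is a dict mapping example id -> label. An id missing
    from a model's predictions shows up as None in that model's column.
    """
    rows = []
    for example_id in sorted(truth):
        a = preds_a.get(example_id)
        b = preds_b.get(example_id)
        rows.append([example_id, truth[example_id], a, b, a == b])
    return rows


def log_comparison_table(rows, project="tables-gallery-demo"):
    """Log the joined rows as a W&B Table (assumes wandb is installed)."""
    import wandb  # imported lazily so the join helper works without wandb

    run = wandb.init(project=project)
    run.log({"model_comparison": wandb.Table(columns=COMPARISON_COLUMNS, data=rows)})
    run.finish()
```

Filtering the resulting table on the `agree` column would surface the examples where the two models disagree.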

Track every detail and see the bigger picture

Zoom in to visualize a specific prediction at a specific step. Zoom out to see the aggregate statistics, identify patterns of errors, and understand opportunities for improvement. This approach works for comparing steps within a single training run or results across different model versions.

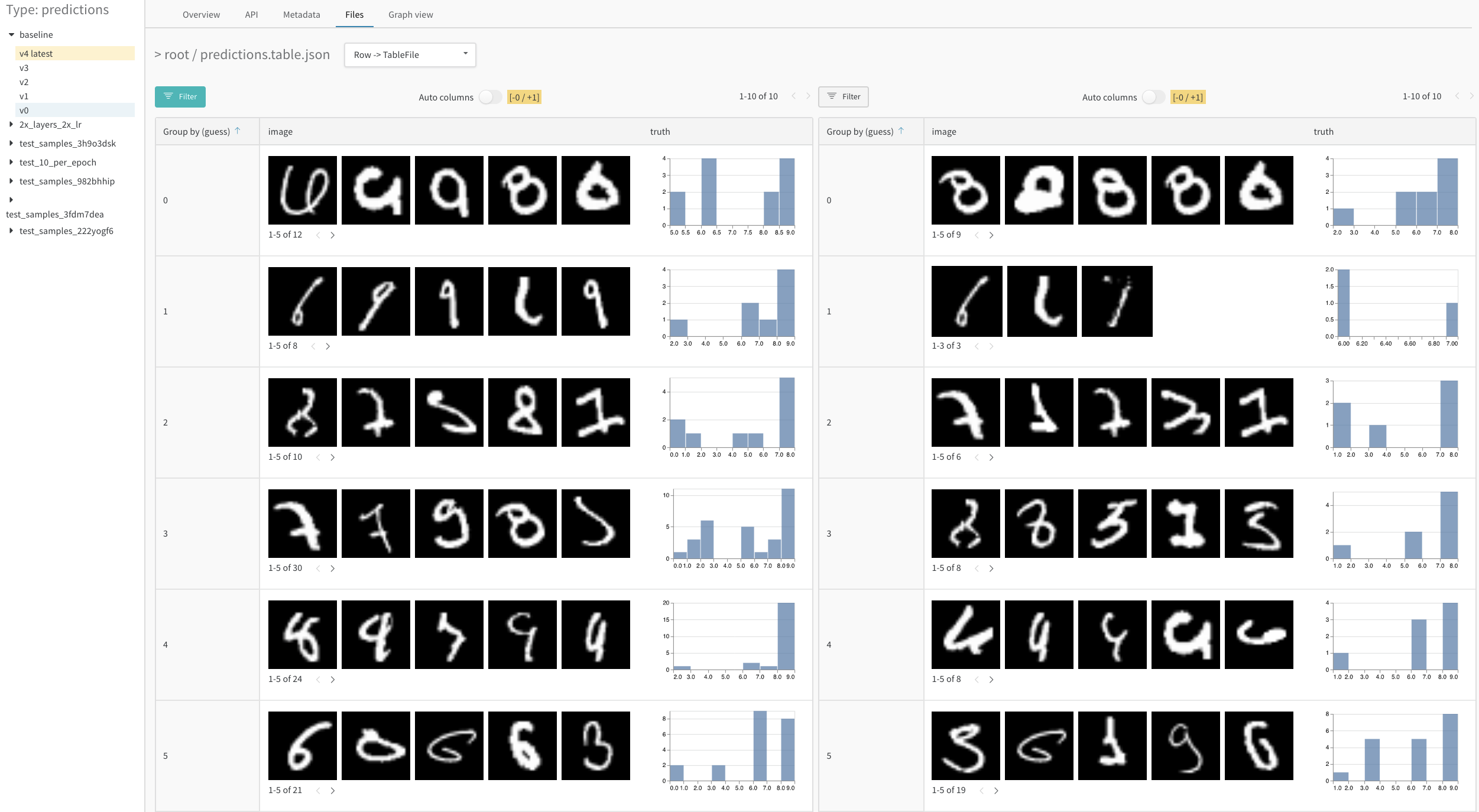

For example, see this table that analyzes results after one and then after five epochs on the MNIST dataset.
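The zoom-in and zoom-out views can be approximated in code by keeping one row per prediction per epoch and then aggregating over those rows. The sketch below assumes predictions are available as plain dictionaries. The column names, the project name, and the helpers `build_epoch_rows`, `accuracy_by_epoch`, and `log_epoch_table` are hypothetical.

```python
from collections import defaultdict

# Hypothetical column layout: one row per prediction per epoch.
EPOCH_COLUMNS = ["epoch", "id", "truth", "prediction", "correct"]


def build_epoch_rows(truth, preds_by_epoch):
    """Flatten {epoch: {example_id: prediction}} into table rows."""
    rows = []
    for epoch in sorted(preds_by_epoch):
        for example_id, pred in sorted(preds_by_epoch[epoch].items()):
            label = truth[example_id]
            rows.append([epoch, example_id, label, pred, pred == label])
    return rows


def accuracy_by_epoch(rows):
    """Aggregate the per-prediction rows into accuracy per epoch."""
    hits, totals = defaultdict(int), defaultdict(int)
    for epoch, _id, _truth, _pred, correct in rows:
        totals[epoch] += 1
        hits[epoch] += int(correct)
    return {epoch: hits[epoch] / totals[epoch] for epoch in totals}


def log_epoch_table(rows, project="tables-gallery-demo"):
    """Log the detailed rows as a W&B Table (assumes wandb is installed)."""
    import wandb  # imported lazily so the helpers above work without wandb

    run = wandb.init(project=project)
    run.log({"predictions_by_epoch": wandb.Table(columns=EPOCH_COLUMNS, data=rows)})
    run.finish()
```

The same aggregate can also be obtained in the UI by grouping the logged table on the `epoch` column, so `accuracy_by_epoch` is mainly useful as a quick sanity check before logging.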

Example Projects with W&B Tables

The following sections highlight some real W&B projects that use W&B Tables.

Image classification

Read this report, follow this colab, or explore this artifacts context to see how a CNN identifies ten types of living things (plants, birds, insects, etc.) from iNaturalist photos.

Audio

Interact with audio tables in this report on timbre transfer. You can compare a recorded whale song with a synthesized rendition of the same melody on an instrument such as a violin or trumpet. You can also record your own songs and explore their synthesized versions in W&B with this colab.

Text

Browse text samples from training data or generated output, dynamically group by relevant fields, and align your evaluation across model variants or experiment settings. Render text as Markdown or use visual diff mode to compare texts. Explore a simple character-based RNN for generating Shakespeare in this report.

Video

Browse and aggregate over videos logged during training to understand your models. Here is an early example that uses the SafeLife benchmark for RL agents seeking to minimize side effects.

Tabular data

View a report on how to split and preprocess tabular data with version control and deduplication.

Comparing model variants (semantic segmentation)

Explore an interactive notebook and a live example of logging Tables for semantic segmentation and comparing different models. Try your own queries in this Table.

Analyzing improvement over training time

Read a detailed report on how to visualize predictions over time, and follow the accompanying interactive notebook.